xgboost time series forecast in R

xgboost, or Extreme Gradient Boosting, is a very convenient algorithm that can be used to solve regression and classification problems. You can check my previous post to learn more about it. It turns out we can also benefit from xgboost when making time series predictions.

The example is in R.

HOW TO

data

As I will be using the xgboost and caret R packages, I need my data to be provided in the form of a dataframe. Let’s use the economics dataset from the ggplot2 package.

There are several time related columns, but for this time series forecast I will use one – unemploy.

library(magrittr) # provides the %>% pipe used throughout
data <- ggplot2::economics %>% dplyr::select(date, unemploy)

Now I will generate index values for my future forecast. Let that be a 12 months prediction.

extended_data <- data %>%
  rbind(tibble::tibble(
    date = seq(from = lubridate::as_date("2015-05-01"), by = "month", length.out = 12),
    unemploy = rep(NA, 12)
  ))

Now my extended dataframe already has the dates specified for the forecast, with no values assigned.

Now we need to take care of the date column. xgboost does not tackle date columns well, so we need to split it into several columns, describing the granularity of the time. In this case months and years:

extended_data_mod <- extended_data %>%
  dplyr::mutate(.,
                months = lubridate::month(date),
                years = lubridate::year(date))

Now we can split the data into training set and prediction set:

train <- extended_data_mod[1:nrow(data), ] # initial data
pred <- extended_data_mod[(nrow(data) + 1):nrow(extended_data), ] # extended time index

In order to use xgboost we need to transform the data into a matrix form and extract the target variable. Additionally we need to get rid of the dates columns and just use the newly created ones:

x_train <- xgboost::xgb.DMatrix(as.matrix(train %>%
                                            dplyr::select(months, years)))
x_pred <- xgboost::xgb.DMatrix(as.matrix(pred %>%
                                           dplyr::select(months, years)))
y_train <- train$unemploy

xgboost prediction

With the data prepared as in the previous section, we can perform the modeling in the same manner as if we were not dealing with time series data. We need to provide the space of parameters for model tuning. We specify the cross-validation method with the number of folds and also enable parallel computations.

xgb_trcontrol <- caret::trainControl(
  method = "cv",
  number = 5,
  allowParallel = TRUE,
  verboseIter = FALSE,
  returnData = FALSE
)
xgb_grid <- base::expand.grid(
  list(
    nrounds = c(100, 200),
    max_depth = c(10, 15, 20), # maximum depth of a tree
    colsample_bytree = seq(0.5), # subsample ratio of columns when constructing each tree; note that seq(0.5) evaluates to 1
    eta = 0.1, # learning rate
    gamma = 0, # minimum loss reduction
    min_child_weight = 1, # minimum sum of instance weight (hessian) needed in a child
    subsample = 1 # subsample ratio of the training instances
  ))

Now we can build the model using the tree models:

xgb_model <- caret::train(
  x_train, y_train,
  trControl = xgb_trcontrol,
  tuneGrid = xgb_grid,
  method = "xgbTree",
  nthread = 1
)

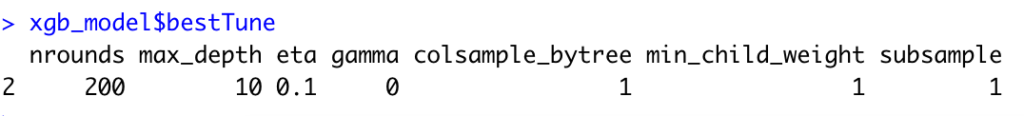

Let’s check the best values that were chosen as hyperparameters:

xgb_model$bestTune

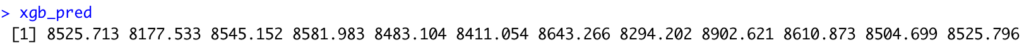

And perform the forecast:

xgb_pred <- xgb_model %>% stats::predict(x_pred)

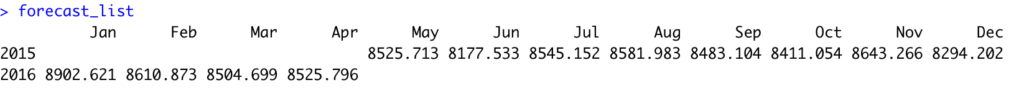

forecast object

As we have the predicted values, we can turn the results into a forecast object, as we would get when using the forecast package. That will allow us, e.g., to use the forecast::autoplot function to plot the results of the prediction. In order to do so, we need to define the several objects that make up a forecast object.

# prediction on a train set
fitted <- xgb_model %>%
  stats::predict(x_train) %>%
  stats::ts(start = zoo::as.yearmon(min(train$date)),
            end = zoo::as.yearmon(max(train$date)),
            frequency = 12)

# prediction in a form of ts object
xgb_forecast <- xgb_pred %>%
  stats::ts(start = zoo::as.yearmon(min(pred$date)),
            end = zoo::as.yearmon(max(pred$date)),
            frequency = 12)

# original data as ts object
ts <- y_train %>%
  stats::ts(start = zoo::as.yearmon(min(train$date)),
            end = zoo::as.yearmon(max(train$date)),
            frequency = 12)

# forecast object
forecast_list <- list(
  model = xgb_model$modelInfo,
  method = xgb_model$method,
  mean = xgb_forecast,
  x = ts,
  fitted = fitted,
  residuals = as.numeric(ts) - as.numeric(fitted)
)
class(forecast_list) <- "forecast"

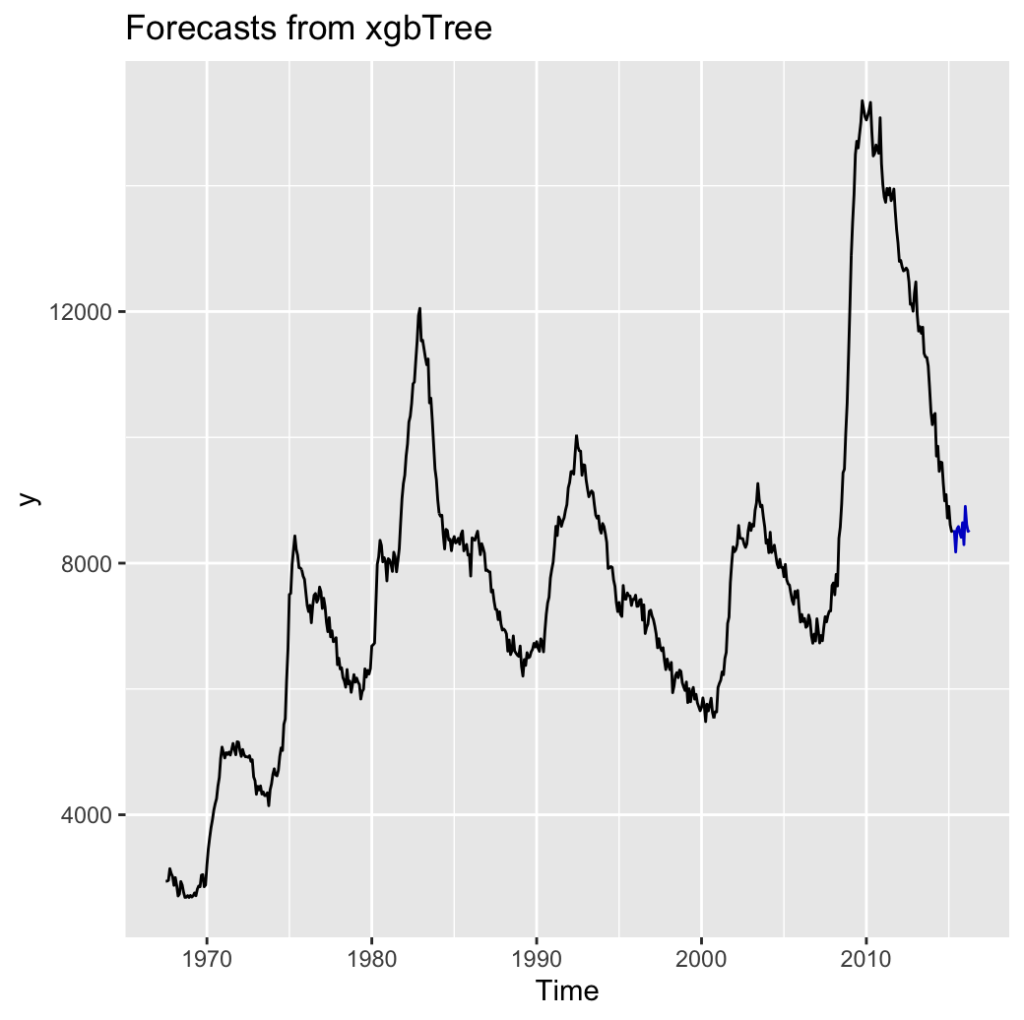

Now we can easily plot the data

forecast::autoplot(forecast_list)

xgboost forecast with regressors

A nice thing about forecasting with xgboost is that we can easily use different regressors with our time series. To do so, we just need to extend the xgboost data matrices properly.

# reg_train and reg_pred are matrices of regressors aligned with train and pred
x_train <- xgboost::xgb.DMatrix(cbind(
  as.matrix(train %>% dplyr::select(months, years)),
  reg_train))
x_pred <- xgboost::xgb.DMatrix(cbind(
  as.matrix(pred %>% dplyr::select(months, years)),
  reg_pred))
y_train <- train$unemploy

Of course we need to make sure that the dates of our predictors are aligned with the initial time series dataset.
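A minimal sketch of that alignment step, in base R. The `target` and `regressor` data frames, their values and the `rate` column are all made up for illustration; the join on `date` is the only point being shown.

```r
# Made-up monthly target series (stand-in for the unemploy data)
target <- data.frame(
  date = seq(as.Date("2015-01-01"), by = "month", length.out = 4),
  unemploy = c(100, 110, 105, 120)
)

# Made-up regressor observed on a different, partly overlapping set of dates
regressor <- data.frame(
  date = seq(as.Date("2014-12-01"), by = "month", length.out = 4),
  rate = c(0.5, 0.6, 0.7, 0.8)
)

# Left join on date: keeps every row of the target, NA where the regressor is missing
aligned <- merge(target, regressor, by = "date", all.x = TRUE)
aligned <- aligned[order(aligned$date), ]

# Regressor matrix in the same row order as the target
reg_train <- as.matrix(aligned[, "rate", drop = FALSE])
```

Joining on the date column rather than binding columns by position makes gaps visible as NA instead of silently shifting the regressor against the target.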

Looking into predicting stocks. I have tried out the prophet package for time series which is good. Had no luck with adding regressors. This example is definitely helpful. Do you have any other suggestions on predicting time series with regressors? I know you mentioned arima, but wondering if you had other suggestions.

Why does the forecast period (blue) look like a copy of the last observed period (black)?

Because the data was randomly generated. I changed the data to make the example more meaningful.

Does forecasting work even if the data covers less than 1 year of history?

In principle yes. It depends on the data granularity, and of course the more points there are, the more sensible a prediction may be expected.

Is there an easy way to convert this solution to be working on weekly data (or even daily) ??

I have tried changing the frequency of the ts objects (created in the fitted and xgb_forecast variables) to a weekly frequency or even tried to convert the xts objects to a ts without considering a freq but had no luck…

Yes! The key is to specify your artificial time-related columns properly. For daily data use daily granularity, for weekly data weekly granularity, etc.

For some daily dataset:

extended_data_mod <- extended_data %>%
  dplyr::mutate(.,
                days = lubridate::day(Date),
                months = lubridate::month(Date),
                years = lubridate::year(Date))

Then for the fitted values and the prediction you need to pass a daily index:

fitted <- xgb_model %>%
  stats::predict(x_train) %>%
  stats::ts(start = c(lubridate::year(min(train$Date)), lubridate::yday(min(train$Date))),
            end = c(lubridate::year(max(train$Date)), lubridate::yday(max(train$Date))),
            frequency = 365)

xgb_forecast <- xgb_pred %>%
  stats::ts(start = c(lubridate::year(min(pred$Date)), lubridate::yday(min(pred$Date))),
            end = c(lubridate::year(max(pred$Date)), lubridate::yday(max(pred$Date))),
            frequency = 365)

Similarly for any other data granularity.
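For weekly data, the same idea could look roughly like the sketch below. It uses base R only; the `Date` column name follows the daily example above, the sample dates and values are made up, and `%U` (week of the year, with weeks starting on Sunday) is just one possible week definition.

```r
# Made-up weekly dates for illustration
weekly <- data.frame(Date = seq(as.Date("2021-01-04"), by = "week", length.out = 6))

# Time-related feature columns at weekly granularity
weekly$weeks <- as.integer(format(weekly$Date, "%U")) # week of the year, 0-53
weekly$years <- as.integer(format(weekly$Date, "%Y"))

# Weekly ts index: start is c(year, week), frequency is 52 periods per year
values <- seq_len(nrow(weekly)) # placeholder for fitted or predicted values
weekly_ts <- stats::ts(values,
                       start = c(weekly$years[1], weekly$weeks[1]),
                       frequency = 52)
```

A frequency of 52 is an approximation, since a year is slightly longer than 52 weeks, in the same way that 365 ignores leap days in the daily case.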

xgb_model <- caret::train(
  x_train, y_train,
  trControl = xgb_trcontrol,
  tuneGrid = xgb_grid,
  method = "xgbTree",
  nthread = 1
)

For this line it gives the following error :

Error in [.xgb.DMatrix(x, 0, , drop = FALSE) : unused argument (drop = FALSE)

That is an issue with the caret package, introduced in some newer version. Try downgrading.

I have an error every time in this part:

xgb_model = train(
  x = x_train,
  y = y_train,
  trControl = xgb_trcontrol,
  tuneGrid = xgb_grid,
  method = "xgbTree",
  nthread = 1
)

error: Error in [.xgb.DMatrix(x, 0, , drop = FALSE) : unused argument (drop = FALSE)

Maybe someone had the same one?

Version 6.0-89 has worked for me

Well done!

How do I downgrade caret? I could not find any instructions.
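One common way to install a specific older release is `remotes::install_version()`. The sketch below assumes the `remotes` package is installed and that the 6.0-89 release mentioned above can still be downloaded from the CRAN archive and built on your machine; it is a suggestion, not something the post itself prescribes.

```r
# Assumption: the remotes package is available (install.packages("remotes"))
# Installs the caret release that was reported to work in the comment above
remotes::install_version("caret", version = "6.0-89")

# Check which version is installed now (restart R first if caret was already loaded)
utils::packageVersion("caret")
```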

xgb_model <- caret::train(
  x_train, y_train,
  trControl = xgb_trcontrol,
  tuneGrid = xgb_grid,
  method = "xgbTree",
  nthread = 1
)

For this line it gives also the following error :

Error in [.xgb.DMatrix(x, 0, , drop = FALSE) : unused argument (drop = FALSE)

Try downgrading caret package – this is a known issue and apparently still not fixed.

Why is the predicted value of the test set the same as the fitting value of the last year of the training set? Is there a solution?

Does anyone have an old version of the caret package as a binary, like a .zip, and for UCRT?

I have downgraded caret, but it still does not work for me:

> xgb_pred <- xgb_model %>% stats::predict(x_pred)

Error in [.xgb.DMatrix(newdata, , colnames(newdata) %in% object$finalModel$xNames, : unused argument (drop = FALSE)

Thanks for your tutorial. For the record, the xgb.DMatrix error can be avoided by removing the xgb.DMatrix conversion. Instead, simply convert to a classic R matrix: as.matrix(pred %>% dplyr::select(months, years)).

You only need to do so for the train and pred variables and everything will work fine.
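A minimal sketch of that workaround, assuming `train` and `pred` carry the `months` and `years` columns created in the post. The two small data frames here are made-up stand-ins so the snippet runs on its own.

```r
# Made-up stand-ins for the train and pred data frames from the post
train <- data.frame(months = c(1, 2, 3), years = c(2015, 2015, 2015),
                    unemploy = c(10, 12, 11))
pred <- data.frame(months = c(4, 5), years = c(2015, 2015), unemploy = NA)

# Plain numeric matrices instead of xgboost::xgb.DMatrix objects
x_train <- as.matrix(train[, c("months", "years")])
x_pred <- as.matrix(pred[, c("months", "years")])
y_train <- train$unemploy

# These are then passed to caret exactly as in the post:
# xgb_model <- caret::train(x_train, y_train, trControl = xgb_trcontrol,
#                           tuneGrid = xgb_grid, method = "xgbTree", nthread = 1)
# xgb_pred <- stats::predict(xgb_model, x_pred)
```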

Thank you very much jons2580, I had been trying to solve this error for more than 2 months:

Error in [.xgb.DMatrix(x, 0, , drop = FALSE) : unused argument (drop = FALSE)

With your tip I managed to get past that error and move on with the project.

One recommendation: although it is true that we can replace the xgboost matrix (xgb.DMatrix) with the traditional matrix (as.matrix), it is important to keep it as follows:

test_Dmatrix <- pred %>%
  dplyr::select(months, years) %>%
  as.matrix(pred %>% dplyr::select(months, years)) %>%
  xgb.DMatrix()

Many thanks again

Hello

I got this error:

WARNING: src/c_api/c_api.cc:935: `ntree_limit` is deprecated, use `iteration_range` instead.

How can I solve it?

Thank you

If I wanted to calculate the MSE, MAPE and similar metrics, how can I do that?
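One way to compute such metrics, as a minimal base R sketch. `forecast_metrics` is a hypothetical helper, not part of any package, and the numbers are made up. It assumes some known values were held back to compare against, since the 12 forecast months in the post have no actuals yet. For in-sample errors, the `ts` and `fitted` objects from the post could be passed in via `as.numeric()`.

```r
# Hypothetical helper: common error metrics for two equally long numeric vectors
forecast_metrics <- function(actual, predicted) {
  stopifnot(length(actual) == length(predicted))
  err <- actual - predicted
  c(
    MSE = mean(err^2),                    # mean squared error
    RMSE = sqrt(mean(err^2)),             # root mean squared error
    MAE = mean(abs(err)),                 # mean absolute error
    MAPE = mean(abs(err / actual)) * 100  # mean absolute percentage error, in %
  )
}

# Made-up values for illustration
actual <- c(100, 200, 400)
predicted <- c(110, 190, 400)
metrics <- forecast_metrics(actual, predicted)
```

MAPE is undefined when any actual value is zero, so it is only meaningful for strictly non-zero series such as unemploy.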

Hi

Does the model with regressors expect that we have the data of regressors available for the future? intuitively it seems so but am I missing something?

Hello, is this an irregular time series? The date ranges don’t look the same. If so, does this mean that XGBoost can also be used on irregular time series?